Python và Lalicat: Công cụ cơ bản để quét web an toàn và hiệu quả

What is web scraping? How does it work?

Web scraping is a highly proficient and automated methodology employed to amass data from websites such as Reddit. This technique relies on computer programs, commonly referred to as web crawlers or spiders, which extract textual content, visuals, hyperlinks, and other valuable data from web pages. Diverse websites and data requests necessitate distinct web scraping methodologies. Acquiring data from certain websites that furnish structured data via APIs is comparatively straightforward, whereas others require the intricate process of parsing HTML code for data aggregation, rendering it more arduous.

It is noteworthy to mention that computer languages and tools like Python, R, and Selenium rank among the extensively utilized resources for web scraping. By utilizing these techniques, web scrapers automate the act of browsing websites, completing forms, and extracting data. This automated approach not only economizes time and exertion but also heightens the precision and dependability of data accumulation. Consequently, web scraping technology assumes an indispensable and significant role in contemporary data analysis and research endeavors.

Benefits of web scraping

Web scraping technology assumes an indispensable role as a paramount instrument, empowering businesses, individuals, and academia to expeditiously and proficiently amass data from the vast expanse of the Internet. In an era marked by an incessantly expanding volume of online information, web scraping has emerged as a pivotal approach for the systematic acquisition and meticulous evaluation of data.

Web scraping manifests itself in several distinct and targeted applications, encompassing but not limited to market research, lead generation, content aggregation, and data analysis. Market research capitalizes on web scraping to assemble invaluable market data and glean competitive intelligence, including pricing insights, product appraisals, and customer sentiment analysis. Conversely, lead generation harnesses the potential of web scraping through Python to procure contact particulars such as email addresses and phone numbers from websites. In terms of content aggregation, web scraping serves as an adept tool to collate a multifarious array of content sources, ranging from news articles and social media posts to blog entries, thereby constructing a comprehensive repository centered around a specific subject matter. Moreover, when applied to data analysis, web scraping empowers researchers and analysts to accumulate and scrutinize data for diverse purposes, such as studying consumer behavior, monitoring trends, and conducting sentiment analysis.

At its core, web scraping stands as an influential force that expedites decision-making processes, furnishes illuminating data insights, and curtails the duration of research endeavors. Nevertheless, it is imperative to exercise ethical and responsible practices when employing web scraping techniques. Adhering to the terms of service stipulated by the websites being crawled is paramount, ensuring the legal and ethical utilization of data while safeguarding individual privacy rights.

What is Lalicat? How does it assist users?

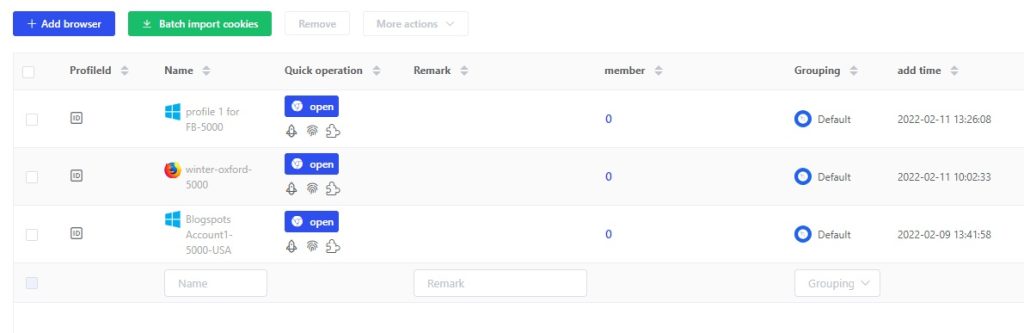

Lalicat serves as a highly secure browsing platform tailored for the proficient management of diverse online identities. Designed specifically for web developers, Lalicat acts as a shield, safeguarding their web crawlers from detection by sophisticated platforms including Facebook, Google, Cloudflare, and others. Offering users an enclosed and confidential digital environment, Lalicat enables seamless web browsing experiences, encompassing the creation and management of multiple browser accounts, as well as the automation of web scraping operations. Within Lalicat's realm, users can effortlessly establish and maintain multiple configuration files, each endowed with its unique array of parameters that remain distinct and non-intersecting. This unique capability empowers users to effortlessly access multiple accounts on a single website without arousing suspicion. Notably, even behemoths of tracking prowess like Meta and Amazon find themselves incapable of distinguishing users operating within the Lalicat ecosystem, as they are perceived as ordinary Chrome users. This paradigm shift proves transformative for businesses and individuals necessitating the management of numerous social media, advertising, or e-commerce accounts.

Moreover, beyond its identity management prowess, Lalicat showcases heightened scraping capabilities, enabling users to employ pre-built or custom scrapers for data acquisition from websites without the risk of being banned. This attribute emerges as a crucial and invaluable asset for businesses and researchers seeking to gather data for market research, competitive analysis, and other scholarly pursuits. Lalicat's scraping feature provides users with a hassle-free means of collecting data from targeted websites without the looming specter of detection, bans, or other forms of restriction. This, in turn, optimizes the efficiency and precision of data collection, bolstering the quality and effectiveness of market analysis and research endeavors.

In essence, Lalicat emerges as an exceptionally practical and user-friendly tool that streamlines the management of diverse online identities while elevating the art of web scraping. By enabling seamless multi-account management and equipping users with a robust scraping mechanism shielded from detection and limitations, Lalicat catalyzes productivity enhancements and fortifies data integrity. Moreover, its comprehensive suite of features, encompassing private browsing, automated web scraping, and the creation and administration of multiple browser accounts, positions Lalicat as a comprehensive and secure browsing solution. For enterprises and researchers reliant on the acquisition of web data, Lalicat offers an efficient, reliable, and secure tool capable of elevating the efficiency and precision of data collection and analysis endeavors.

Step by step to set up and use the Lalicat for web scraping

Step One: Initiate Account Creation. Commencing your Lalicat journey necessitates the establishment of an account. This initial step entails visiting the official Lalicat website and engaging in the creation process, where you shall furnish your email address for registration purposes. Once the account is successfully created, you may proceed to log in to the platform and commence the configuration of your browser profiles.

Step 2: Forge Browser Profiles. Lalicat employs browser profiles as distinctive personas that emulate genuine user behavior. Before constructing a profile, you must elect the desired browser, such as Google Chrome or Mozilla Firefox. Subsequently, you can meticulously shape the configuration file by incorporating essential elements like the user agent, thumbprint, and IP address. These features collectively contribute to heightening the authenticity of the profile, effectively diminishing the likelihood of detection.

Step 3: Fine-tune Proxy Settings. To further fortify the veil of stealth, you possess the ability to tailor the proxy settings within your browser profile. This strategic maneuver endows each website visitation with a distinct IP address, thereby impeding diligent monitoring of your online conduct.

Step 4: Embark on Reddit Web Scraping Endeavors. Empowered by the establishment of proxy settings and the meticulous construction of browser profiles, you are now primed to embark on the realm of web scraping. This involves crafting web scraping scripts, typically utilizing a computer language such as Python. These scripts are expressly designed to navigate the Reddit website and efficiently extract the desired data utilizing the browser profile orchestrated by Lalicat. By seamlessly harmonizing these elements, you can effortlessly amass data from Reddit without incurring detection or encountering restrictions.

Tips when carrying out web scraping

If you intend to engage in web scraping activities on Reddit, here are some suggestions and guidelines to ensure that your endeavor adheres to legal, sustainable, and efficient principles:

First and foremost, it is imperative to comply with the site's policy. Before extracting data from a website, thoroughly review its terms of service and privacy policy. Certain websites may explicitly prohibit web scraping or mandate prior authorization for data extraction. Failure to adhere to site policies can result in the suspension of your activity or even legal repercussions.

Secondly, uphold ethical and legal standards. Web scraping raises various ethical and legal concerns, including safeguarding the privacy of individuals whose data is being scraped and complying with data protection laws. Ensure that data is solely obtained for legitimate and ethical purposes, and whenever necessary, obtain consent or anonymize the data. Simultaneously, refrain from misusing scraped data or employing it for unlawful or unethical activities. These precautions ensure that your web scraping practices align with ethical and legal frameworks.

Thirdly, handle errors and exceptions adeptly. Web scraping is prone to encountering errors and exceptions, such as server errors, connection timeouts, and invalid data. It is crucial to handle these issues gracefully by implementing appropriate measures, such as retrying failed requests or logging errors for subsequent analysis. By doing so, you guarantee the efficiency and sustainability of your web scraping campaigns.

Fourthly, utilize a user agent string. A user agent string is a code snippet that identifies a website to web crawlers. Employing a user agent string commonly used by web browsers can help evade detection and prevent websites from obstructing scraping activities. However, be mindful that certain sites can detect user agent strings, so exercise caution and select and utilize them judiciously.

Fifthly, employ a proxy. Proxies serve the purpose of rotating IP addresses and evading detection by websites that may attempt to block scraping activities. Nonetheless, it is essential to utilize a reputable proxy provider and adhere to their terms of service to avoid being banned or encountering legal ramifications.

Lastly, avoid overburdening your server. Web scraping can place significant stress on your website server, so it is crucial to refrain from scraping excessive amounts of data or inundating the server with an excessive number of requests within a short timeframe. Consider incorporating delays between requests or fetching data during off-peak hours to prevent overloading the site. Web scraping is a potent tool for collecting and analyzing internet data; however, it must be executed with care and within legal boundaries. By following the aforementioned tips and best practices, you ensure that your web scraping campaign on Reddit is sustainable, efficient, and conducted in accordance with the law. This not only safeguards your online privacy and security but also enhances the efficacy of data collection and analysis, enabling more precise and comprehensive results for your business and research endeavors.

Conclusion

Web scraping Reddit is a potent mechanism that can furnish invaluable perspectives and knowledge for diverse objectives like market research and competitor analysis. Nonetheless, engaging in web scraping necessitates meticulous planning and the utilization of appropriate tools to ensure conformity to legal and ethical standards, as well as to avert website blocking.

Python stands as a widely acclaimed programming language for web scraping due to its extensive array of libraries and tools, including Beautiful Soup, Scrapy, and Selenium. These libraries facilitate effortless HTML parsing, web browsing automation, and data extraction from websites.

Moreover, Lalicat serves as an exceptional web scraping management tool, designed to oversee multiple online personas and web scraping endeavors. It furnishes a secure and private browsing environment, enables the creation of multiple browser profiles, automates web scraping tasks, and supports integration with proxy servers. These attributes render it an indispensable asset for businesses and individuals requiring the management of multiple online identities and the acquisition of web-based data.

All in all, by employing Python and Lalicat for web scraping, organizations and individuals can enhance their capacity to extract valuable insights and information from the web, with heightened efficiency and adherence to ethical and legal principles. Through the utilization of these tools, one can seamlessly scrape Reddit's data, analyze it, and harness its potential to deliver significant value to business operations or research initiatives.

Consequently, we strongly endorse the utilization of Python and Lalicat for web scraping purposes, accompanied by strict adherence to the aforementioned tips and best practices. To facilitate a smoother initiation into the world of web scraping, you may download Lalicat from the provided link and avail yourself of our free plan to commence secure web scraping endeavors.

dùng thử miễn phí

Chúng tôi cung cấp 3 ngày dùng thử miễn phí cho tất cả người dùng mới

Không có giới hạn về tính năng